Journal of Medical Sciences and Health

DOI: 10.46347/jmsh.2017.v03i02.002

Year: 2017, Volume: 3, Issue: 2, Pages: 5-10

Original Article

Assad Ali Rezigalla1, Asim Mohammed Abdalla2, Heitham Mutwakil Mohammed3, Muntaser Mohammed Alhassen4, Mohammed Abbas Mohammed5

1Department of Anatomy, College of Medicine, University of Bisha, Saudi Arabia,

2Department of Anatomy, College of Medicine, King Khalid University, Saudi Arabia,

3Department of Anatomy, College of Medicine, Jazan University, Saudi Arabia,

4Faculty of Applied Sciences, Jazan University, Saudi Arabia,

5Department of Pediatrics, College of Medicine, University of Bisha, Saudi Arabia

Address for correspondence:

Assad Ali Rezigalla, Department of Anatomy, College of Medicine, University of Bisha, Saudi Arabia. Phone: 00966500669178. E-mail: [email protected]

Background: Assessment drives learning. Continuous assessment (CA) is used to promote sustained, high-quality student learning. It has many advantages and some disadvantages. This study aimed to examine students’ perceptions of CA and its implementation and to correlate them with students’ academic achievement.

Materials and Methods: A questionnaire was developed to evaluate students’ perceptions of CA. Data obtained from the questionnaire and from students’ results were analyzed.

Results: All students reported that CA helped them in learning (100%), although fewer found it very helpful in identifying areas of weakness (20.3%) and answering the final exam (5.8%). The time intervals between assessment tools were not enough for 89% of the students, whereas 98.3% responded that the tools used were enough for evaluation. The marks distribution of CA was considered good by 73.8% of the students. The majority of the students (97.1%) reported that CA did not pose an extra academic load. Nearly all students (99.4%) preferred the use of CA as part of the assessment, and 94.8% preferred replacing the final exam with CA.

Conclusions: CA was only recently implemented in the college. Divergent student responses may be explained by students’ limited experience and familiarity with CA. Assessment tools should be planned carefully. Students prefer CA to a single final examination. Students’ views of CA reflect its core philosophy.

KEY WORDS: Continuous assessment, student perception, tools of assessment.

Introduction

The aim of assessment is to test students’ acceptance of the teaching process.[1] Accordingly, students adapt their learning methods to the assessment tools.[2] Thus, assessment drives learning.[3,4]

The philosophy behind continuous assessment (CA) is to promote sustained, high-quality student learning[5] rather than merely to serve as a method of assessment.[6,7] As a method of evaluation, CA can serve both summative and formative functions.[8,9] The use of CA has a positive impact on both the academic achievement and the psychological status of students.[6,8,10] It also gives students feedback on their learning,[11] although excessive use of CA can negatively affect both academic achievement and feedback from students.[12]

CA has many advantages,[5,8,13] such as guidance, orientation, and diagnosis of the learner’s areas of weakness. In addition, it gives both teachers and students feedback based on performance. It allows teachers and students to focus on knowledge that has not been adequately covered or skills that have not been adequately mastered, and it permits periodic interaction between students and teachers. Its disadvantages include loss of student interest because of frequent examination; however, the major disadvantage of CA is that students have no opportunity to integrate the knowledge and skills acquired over the whole semester. Problems with CA relate to the academic staff and to the practice and implementation of CA.[5] Problems associated with the academic staff include their skills in test construction and administration and their attitude toward CA. Practice and implementation problems include the time intervals between assessments, the number and types of tools used, the amount of knowledge or skills covered, and the recording of results.

This study aimed to examine students’ perceptions of CA and its implementation and to correlate these perceptions with their academic achievement.

Background

This study was conducted from January to February 2015 at the College of Medicine, King Khalid University (KKU). The college awards the MBBS degree after successful completion of 12 academic semesters, and its teaching plan is based on a traditional curriculum. In each semester, CA consists of two successive continuous exams and an assignment, which together account for 50% of the final result (FR); the final assessment (FA) at the end of the semester accounts for the other 50%. In the preclinical courses, CA uses different assessment tools, such as quizzes, electronic tests, and written and practical assignments.
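The grading scheme above amounts to a simple weighted sum. The sketch below is a minimal illustration only: it assumes that CA and FA marks are each expressed as percentages (0 to 100) before weighting, and the function name `final_result` is hypothetical. The article does not give pass marks or the internal weighting of the two exams and the assignment within CA, so neither is modeled.

```python
# Minimal sketch of the 50/50 CA/FA weighting described above.
# Assumption (not stated in the article): CA and FA marks are each
# expressed as a percentage (0-100) before weighting.

CA_WEIGHT = 0.5  # share of the final result (FR) contributed by CA
FA_WEIGHT = 0.5  # share contributed by the final assessment (FA)


def final_result(ca_percent: float, fa_percent: float) -> float:
    """Return the final result (FR) on a 0-100 scale (hypothetical helper)."""
    for name, value in (("CA", ca_percent), ("FA", fa_percent)):
        if not 0 <= value <= 100:
            raise ValueError(f"{name} mark must be between 0 and 100, got {value}")
    return CA_WEIGHT * ca_percent + FA_WEIGHT * fa_percent


# Hypothetical student: 80% in CA, 60% in FA.
print(final_result(80, 60))  # → 70.0
```

Because each component carries equal weight, a 10-mark change in either CA or FA shifts the FR by 5 marks.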

Methods

To evaluate students’ perceptions of CA, a standardized structured questionnaire was developed. The questionnaire consisted of close-ended questions and a five-point Likert scale. The questions were grouped into three categories. The first category addressed the main function of CA: whether it helped students in learning and studying seriously, identifying areas of weakness, and answering the final exam. The second category addressed implementation: the time interval between assessments, the number of tools used and their adequacy for evaluating students, and the percentage weight of CA and the distribution of marks among tools. The third category addressed students’ preference for the use of CA and whether it caused an additional academic load. The questionnaire was tested in a pilot study, and its internal consistency was assessed using Cronbach’s alpha (0.89). Necessary modifications were made, and data generated from the pilot study were not included in the analysis.
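The pilot reliability figure (0.89) is a Cronbach’s alpha, α = k/(k − 1) × (1 − Σ s²ᵢ / s²_T), where k is the number of items, s²ᵢ the variance of item i across respondents, and s²_T the variance of respondents’ total scores. The sketch below computes it from a respondents × items matrix of Likert scores. The data layout, the function name `cronbach_alpha`, and the small response matrix are all hypothetical; the pilot data themselves are not available.

```python
from statistics import variance


def cronbach_alpha(responses: list[list[int]]) -> float:
    """Cronbach's alpha for a respondents x items matrix of Likert scores.

    Each inner list holds one respondent's answers (e.g. 1-5) to the
    same k items. Sample variances are used throughout; the ratio in the
    formula is unchanged by the variance convention as long as it is
    applied consistently.
    """
    if len(responses) < 2:
        raise ValueError("need at least two respondents")
    k = len(responses[0])
    if k < 2:
        raise ValueError("need at least two items")
    if any(len(row) != k for row in responses):
        raise ValueError("every respondent must answer every item")
    # Variance of each item across respondents.
    item_vars = [variance([row[i] for row in responses]) for i in range(k)]
    # Variance of each respondent's total score.
    total_var = variance([sum(row) for row in responses])
    if total_var == 0:
        raise ValueError("total scores have zero variance")
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


# Hypothetical pilot: 5 respondents x 3 five-point items (illustrative only).
pilot = [
    [4, 5, 4],
    [3, 3, 4],
    [5, 5, 5],
    [2, 3, 2],
    [4, 4, 3],
]
print(round(cronbach_alpha(pilot), 2))
```

Values closer to 1 indicate that the items vary together, which is why alpha is read as a measure of internal consistency rather than of validity.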

The study was approved by the ethics committee of the College of Medicine, KKU. The inclusion criterion was registration in the level four anatomy courses (170 students, total coverage) together with agreement to participate, given by signing written consent. Students who missed any part of the CA or declined to participate in the study were excluded.

Data analysis

The data obtained from the returned questionnaires and from students’ results in the CA, FA, and FR were analyzed using SPSS software (IBM SPSS Statistics for Windows, Version 20.0. Armonk, NY: IBM Corp. USA). A t-test was used to test for significant differences, and P < 0.05 was considered significant.
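The article does not state which form of t-test was used. Because FA and FR marks come from the same students, a paired (dependent-samples) t-test is one plausible choice; this is an assumption. The sketch below computes the paired statistic t = d̄ / (s_d / √n), with n − 1 degrees of freedom, using only the Python standard library. The function name `paired_t` and the marks are hypothetical. A p-value then follows from the t distribution with n − 1 degrees of freedom, which SPSS reports directly (as does `scipy.stats.ttest_rel` in Python).

```python
from math import sqrt
from statistics import mean, stdev


def paired_t(x: list[float], y: list[float]) -> tuple[float, int]:
    """Return (t statistic, degrees of freedom) for a paired t-test of x against y.

    x[i] and y[i] must belong to the same student.
    """
    if len(x) != len(y):
        raise ValueError("paired samples must have the same length")
    n = len(x)
    if n < 2:
        raise ValueError("need at least two pairs")
    diffs = [a - b for a, b in zip(x, y)]
    sd = stdev(diffs)
    if sd == 0:
        raise ValueError("all differences are equal; t is undefined")
    # Mean within-student difference divided by its standard error.
    t = mean(diffs) / (sd / sqrt(n))
    return t, n - 1


# Hypothetical FA and FR marks for four students (illustrative only).
fa = [65.0, 72.0, 80.0, 58.0]
fr = [64.0, 70.0, 79.0, 56.0]
t, df = paired_t(fa, fr)
print(f"t = {t:.3f}, df = {df}")  # → t = 5.196, df = 3
```

Pairing removes between-student variation from the comparison, which is the usual reason to prefer it when both measurements come from the same individuals.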

Results

Out of 170 questionnaires distributed to the students, 144 (84.7%) were completed and returned.

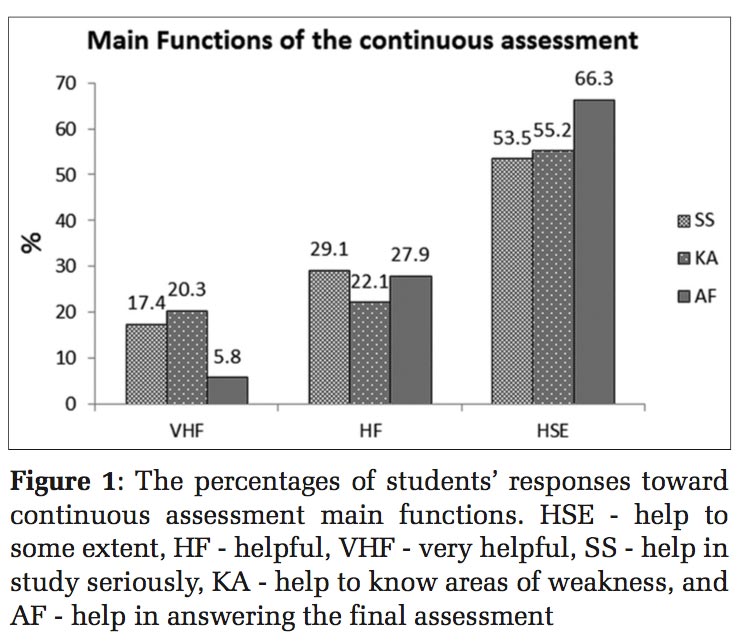

All students responded that CA helped them in learning (144, 100%). Fewer than one-third of the students found CA very helpful in studying seriously (25, 17.4%), identifying areas of weakness (29, 20.3%), and answering the final exam (8, 5.8%) [Figure 1]. The majority of the students responded that CA helped them to some extent in studying seriously (76, 53.3%), identifying areas of weakness (79, 55.2%), and answering the final exam (95, 66.3%).
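Each questionnaire item above is reported as a count and a percentage per response category. The sketch below tallies one item’s answers in that form. The category labels, the function name `tally`, and the answers are hypothetical, and using the number of students who answered the item as the denominator is an assumption, since the article does not state the denominator for each item.

```python
from collections import Counter
from typing import Optional


def tally(responses: list[Optional[str]]) -> dict[str, tuple[int, float]]:
    """Count each response category and its percentage of students who answered.

    None marks a blank answer; blanks are excluded from the denominator.
    Categories are returned from most to least frequent.
    """
    answered = [r for r in responses if r is not None]
    if not answered:
        return {}
    counts = Counter(answered)
    return {
        category: (n, round(100 * n / len(answered), 1))
        for category, n in counts.most_common()
    }


# Hypothetical answers to one item (illustrative only).
item = ["very helpful"] * 2 + ["to some extent"] * 5 + ["not helpful"] * 3 + [None]
print(tally(item))
# → {'to some extent': (5, 50.0), 'not helpful': (3, 30.0), 'very helpful': (2, 20.0)}
```

The same arithmetic gives the overall response rate: 100 × 144 / 170 ≈ 84.7%.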

Time intervals between the CA tools were not enough for most students (128, 89%), whereas nearly all (141, 98.3%) responded that the CA tools used were enough for evaluation. The percentage weight and marks distribution of CA were considered good by most students (106, 73.8%). The majority of the students (139, 97.1%) reported that CA did not pose an extra academic load. Nearly all students (143, 99.4%) preferred the use of CA as part of the assessment, and most (136, 94.8%) preferred replacing the final exam with CA. MCQs were the most preferred CA tool (50, 35.3%), followed by Blackboard assignments (32, 22.3%) [Figure 2].

The success rate (SR) in CA (138, 96.3%) was higher than in both the FA (134, 93.2%) and the FR (115, 80.3%), and 104 students (72.7%) passed both CA and the FA. All students who failed CA also failed the FA, and only five students (2.9%) who had failed the FA succeeded in the FR. A higher correlation was found between FA and FR (138, 96.27%) than between CA and FR (131, 91.61%). The difference between FA and FR was statistically significant (P = 0.0001).
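Success rates such as those above are proportions of students passing each component. The sketch below computes them from per-student pass/fail flags. The class `StudentOutcome`, the function `success_rates`, and the cohort are hypothetical, and because the article does not give the pass marks, each flag is assumed to have been set already according to the college’s own criteria.

```python
from dataclasses import dataclass


@dataclass
class StudentOutcome:
    """Pass/fail flags for one student (pass marks assumed set by the college)."""
    passed_ca: bool
    passed_fa: bool
    passed_fr: bool


def success_rates(outcomes: list[StudentOutcome]) -> dict[str, float]:
    """Percentage of students passing each component, and passing both CA and FA."""
    n = len(outcomes)
    if n == 0:
        raise ValueError("no outcomes supplied")

    def pct(count: int) -> float:
        return round(100 * count / n, 1)

    return {
        "CA": pct(sum(o.passed_ca for o in outcomes)),
        "FA": pct(sum(o.passed_fa for o in outcomes)),
        "FR": pct(sum(o.passed_fr for o in outcomes)),
        "CA_and_FA": pct(sum(o.passed_ca and o.passed_fa for o in outcomes)),
    }


# Hypothetical cohort of four students (illustrative only).
cohort = [
    StudentOutcome(True, True, True),
    StudentOutcome(True, True, False),
    StudentOutcome(True, False, False),
    StudentOutcome(False, False, False),
]
print(success_rates(cohort))
# → {'CA': 75.0, 'FA': 50.0, 'FR': 25.0, 'CA_and_FA': 50.0}
```

Keeping the flags per student, rather than only the totals, is what makes joint figures such as "passed both CA and FA" computable.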

Discussion

CA has previously been used as part of student assessment[8] or as its sole method.[14] The College of Medicine, KKU, only recently implemented CA, which may have influenced students’ views of the assessment, as only 25.9% of students preferred it. In contrast, the majority of the students reported that CA would not impose an extra load on them. These divergent responses may be explained by students’ limited experience and familiarity with CA, which had only recently been implemented.

From a practical point of view, CA does not impose an extra load on students because it consists of different assessment tools. The tools that can be used in CA include quizzes, practical tests, and assignments, either written or practical.[15] Each tool targets different learning outcomes. Moreover, CA should not be implemented as an isolated entity but should be carefully planned to detect the knowledge and skills students have acquired.[15] Using different assessment tools raises questions about the time intervals, the number of tools, and the choice of a suitable tool at a suitable time.[16] The majority of students (88.7%) considered the time interval between assessments insufficient, although they considered the number of tools used sufficient for evaluating students.

CA using varied tools has both formative and summative functions.[8] It was used long ago as the sole method of assessment,[17] an approach accepted and supported by students.[14] Our findings agree with these earlier studies, as 93.5% of the students agreed to using CA instead of a single final examination. This implies that students see CA as a series of small exams, each focused on a targeted amount of knowledge or skills, through which they can monitor their achievement and anticipate their needs. This student view reflects the core philosophy of CA.

Students described CA as helpful to some extent in studying seriously, identifying areas of weakness, and answering the final exam. CA is designed to keep students in an ongoing process of learning rather than waiting until the end of the course to exert their efforts.[5] Thus, it should affect overall academic achievement. The success rate was high in CA (95.3%), lower in the FA (93.2%), and lower still in the FR (80.3%). Further, 72.7% of the students who passed CA succeeded in both the FA and the FR. These academic results indicate a considerable impact of CA. Many studies have confirmed this impact and have also reported psychological satisfaction among students.[6,8,13,14,18]

Although different tools are used in CA, MCQs are the most common.[19,20] Students’ responses in this study support the use of MCQs in CA, which they preferred to Blackboard tools. Even well-constructed MCQs mainly test factual knowledge,[21] with little coverage of higher-order thinking skills.[20,21] For both teachers and administrators, MCQs are easy to implement and have a high degree of validity and reliability.[22]

CA allows students to monitor their progress and identify what they need to improve, adding a student-centered dimension to assessment.[15,23] Applying new curricula requires a change in assessment methods, which must be aligned with the type of curriculum.[14]

Conclusion

CA was newly adopted at the College of Medicine, KKU. According to students’ responses, CA fulfilled its main function of helping students in learning and studying. Students’ responses about the implementation of CA were favorable, although the time interval between assessments was considered insufficient. CA had a positive impact on students’ results.
